Exploring the Hype Cycle of AI

Right now, we are in the Trough of Disillusionment when it comes to AI. Sounds depressing, doesn’t it?

But what is the Trough of Disillusionment? Let’s start there.

The Trough of Disillusionment is the third of five stages in the Hype Cycle, a conceptual model describing the rise, fall, and eventual embrace of new technologies.

During the first two stages, a technology trigger and the rapid innovation that follows produce a Peak of Inflated Expectations. Unless you truly consume no online material, you've heard or read a claim, statement, or promise more than once in the last year that AI is going to change every aspect of our society. It will make everyone healthier, wealthier, and wiser. It will increase our productivity many times over, and we'll all have more time to relax and pursue other dreams. AI's proponents predict that this utopia is just around the corner, with some expecting it before the end of the decade. When people say AI is bigger than the internet and the smartphone revolution combined, they are clearly at the apex of inflated expectations. Even if their prognosis comes to pass, they certainly hold a lofty view of AI's impact. There are, of course, naysayers, as well as others who warn of AI's dangers, but overall, our society has been collectively climbing toward this peak for a couple of years, especially since the release of ChatGPT in November 2022.

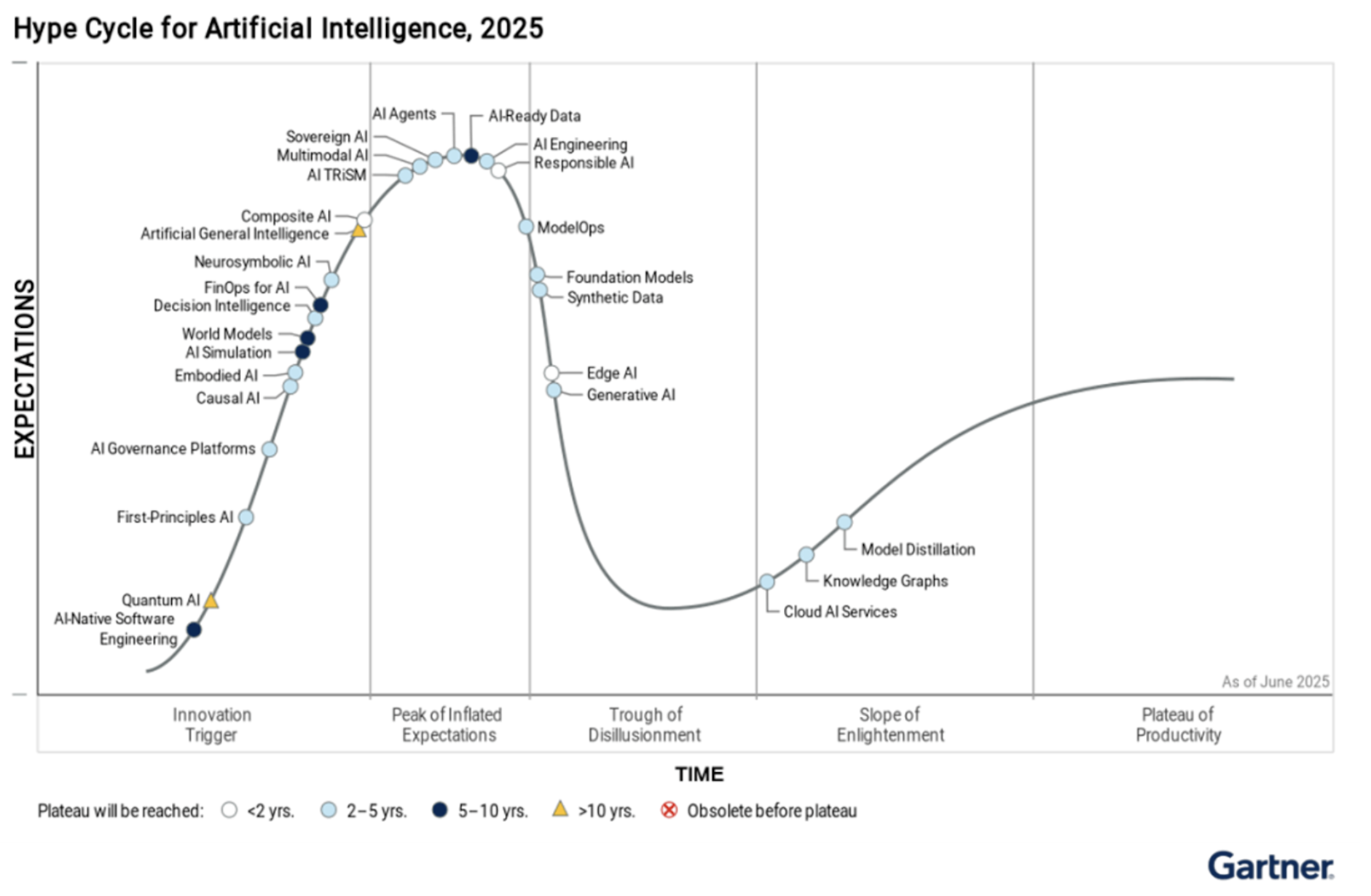

This peak of inflated expectations is shown in the following figure, published by Gartner, a research firm that generates hype curves for various technologies, among other things.

Figure 1: Gartner's AI hype cycle, produced in June 2025. The Trough of Disillusionment, in the lower middle, looks conspicuously empty [1].

Since then, a number of reports and comments have surfaced that dampen some of the hopes and dreams born at the top of the peak.

For example, just one month later, in July 2025, an MIT report titled “The GenAI Divide: State of AI in Business 2025” found that 95% of companies are failing to see meaningful results from their AI projects in terms of profit or cost reduction [2]. Of course, this abysmal statistic might simply reflect that the expertise to apply AI properly is still rare and that employees in many industries have yet to acquire the necessary skills. Regardless, we've clearly fallen from the peak into this trough, where we've realized that AI might not be the catch-all solution we were told it was a year ago. At least, not yet.

Anecdotally, many of my colleagues and students share a common sentiment: the errors, misunderstandings, and hallucinations generated by large language models (LLMs) are eroding the models' credibility as comprehensive tools for engineering and design work. Although these models are frequently correct during an AI session, errors occur often enough that users start to question the other answers. Furthermore, they will likely never be 100 percent correct [3]. That's a big barrier to adopting them with full confidence.

As an aside, this is dangerous because a novice might wrongly believe that everything an AI platform produces is correct, while an expert can more readily spot where it goes wrong. For this reason, I believe the younger generation considers LLMs more reliable than the older generation does, not because the older generation is smarter, but because they've seen more questions and answers over their longer lifetimes and can draw from a larger base of facts.

Another major criticism, discussed in the MIT report, is that these systems don't really learn. Yes, they can be retrained into better models, but that takes time, data, money, and effort, and quite often individuals aren't willing or able to improve the models enough to accurately meet the needs of their business processes. In their defense, the creators never promised such accuracy and capability. Still, we bought into the broader promises, and we have all been left a little deflated when our prompts didn't return what we thought they should. We really are all disillusioned, at least in part.

Even Sam Altman, the CEO of OpenAI, the company that developed ChatGPT, recently said that he's starting to believe the “Dead Internet Theory” [4], which essentially holds that the vast majority of the internet will become garbage generated by AI agents, bots, and other automated algorithms, reducing the internet's usefulness to everyone.

That sounds a lot like the Trough of Disillusionment.

The good news is that some (but not all) technologies cross this deep valley and start climbing the Slope of Enlightenment to the Plateau of Productivity.

As a researcher, I'm quite bullish on this prospect. I am old enough to have lived without the internet and, once I gained access, to have watched it grow from dial-up speeds, when an image took a minute or more to load, to streaming video, networks of billions of people, productivity algorithms, and an endless trove of material for learning, study, and research. But it wasn't always so useful (and, as mentioned, maybe the internet is becoming less useful by the minute). The internet went through periods of rapid growth and, at times, periods of overhype and disillusionment, when its potential seemed overstated and its flaws more visible than its benefits, especially amid the onslaught of password breaches, spam, phishing scams, and viruses. Yet, with patience, innovation, and thoughtful integration, it eventually reshaped nearly every field of human activity, which is something I expect artificial intelligence to start doing… but not right away. It will have to climb back up the Slope of Enlightenment.

My first phone that could take pictures had a resolution of around 640 × 480 pixels. My children won't even look at those early images; it's as if they're an insult to their eyes. Yet they show no restraint in taking thousands of photos (yes, literally thousands in an hour using burst mode) with modern smartphones, even though every picture is many megabytes in size.

I bring up this analogy because the AI models we have today are the worst we will ever have. They can only get better. We will likely laugh at how slow and inaccurate they were back in 2025. Already, few people use AI models from two years ago, so what makes us think we'll still be using GPT-5 in 2027?

But getting to that future will take methodical investment and some patience as well. From time to time, there will likely be one step back for every two steps forward. There will be casualties along the way, and they will come in various forms. Many of them will be jobs. People will have to upskill or change professions, hopefully moving into new and more fulfilling roles [5]. Some of the casualties might even be welcomed, such as wasteful business processes, or dangerous operations currently performed by humans, who are prone to driving tired or intoxicated. Unfortunately, the real human casualties will be the most devastating to witness. Despite its benefits to humankind, technology has always been abused by a few to cause emotional, financial, and even physical harm to others. I can only hope that we can minimize the casualties in this last category as we reach the Plateau of Productivity. But I believe we can.

References

[1] Gartner. “Gartner Hype Cycle Identifies Top AI Innovations in 2025.” Gartner Newsroom, 5 Aug. 2025, www.gartner.com/en/newsroom/press-releases/2025-08-05-gartner-hype-cycle-identifies-top-ai-innovations-in-2025.

[2] MIT NANDA. The GenAI Divide: State of AI in Business 2025. MLQ, July 2025, mlq.ai/media/quarterly_decks/v0.1_State_of_AI_in_Business_2025_Report.pdf.

[3] Swain, Gyana. “OpenAI Admits AI Hallucinations Are Mathematically Inevitable, Not Just Engineering Flaws.” Computerworld, 18 Sept. 2025, www.computerworld.com/article/4059383/openai-admits-ai-hallucinations-are-mathematically-inevitable-not-just-engineering-flaws.html.

[4] Ostrovsky, Nikita. “What to Know About the ‘Dead Internet’ Theory—and Why It’s Spreading.” TIME, 2025, time.com/7316046/sam-altman-dead-internet-theory/.

[5] Duke, Sue. “AI Is Changing Work — the Time Is Now for Strategic Upskilling.” World Economic Forum, 4 Apr. 2025, www.weforum.org/stories/2025/04/linkedin-strategic-upskilling-ai-workplace-changes/.

To cite this article:

Salmon, John. “Exploring the Hype Cycle of AI.” The BYU Design Review, 29 September 2025, https://www.designreview.byu.edu/collections/exploring-the-hype-cycle-of-ai.